Pharma 4.0: Building Quality Into Pharma Manufacturing, From Molecule To Medicine

By Bikash Chatterjee, CEO, Pharmatech Associates

A race is underway to create the pharmaceutical manufacturing of the future, and with Pharma 4.0, powerful market trends are shaping the field. The race is fueled by a growing global marketplace, tempered by the ever-present need for pharmaceutical manufacturers to remain competitive, and heated by escalating complexity as regulators push for continuous product monitoring. Many elements of the industry are about to change. Economic progress in the BRIC nations (Brazil, Russia, India, and China) has expanded market opportunity and added greater complexity to developing and marketing safe and efficacious drugs across the global supply chain.

Healthcare expenditure per capita is set to rise from its 2017 level of $1,137 to $1,427 by 2021. To many, this trend is unsustainable, and if industry does not set its sights on cost containment while managing business performance, there will be a severe reckoning in the marketplace.

Drug Development Challenges

Meanwhile, pharmaceutical companies are locked in a perpetual race against time to innovate and bring new drug therapies to market as quickly and cost-effectively as possible. Innovator companies find their patent protection eroding. While a patent can provide a company intellectual property protection for 20 years or more, more than half of that time will be spent turning the ideas embedded in an individual patent into a marketable product, leaving only a few years to recover the often billions spent in development. Combine this with a development engine in which only 13 percent of the drugs developed ever reach the market [1], and the need to improve the current model could not be more evident.

Industry 4.0: Evolution

The term “Industry 4.0” was coined by the German federal government in 2011 in a national strategy to promote computerized manufacturing. The 4.0 designation was a play on software version control and represents the fourth evolution of the industrial revolution. The previous three industrial revolutions are shown in Figure 1 and described as follows:

- Industry 1.0 refers to the first industrial revolution. It is marked by a transition from hand production methods to machines, using steam power and waterpower.

- Industry 2.0 is the second industrial revolution, better known as the technological revolution. It was made possible by extensive railroad networks and the telegraph, which allowed for faster transfer of people and ideas. It is also marked by increasingly widespread electricity, which allowed for factory electrification and the modern production line, and by great economic growth driven by rising productivity.

- Industry 3.0 occurred in the late 20th century, following the slowdown in industrialization and technological advancement that came after the two world wars. It is also called the digital revolution and is characterized by extensive use of computer and communication technologies in the production process.

Industry 4.0 is based upon the emergence of four technologies that are disrupting the manufacturing sector: the astonishing rise in data volumes, computational power and connectivity, especially new low-power wide-area networks; the emergence of analytics and business-intelligence capabilities; new forms of human-machine interaction such as touch interfaces and augmented-reality systems; and improvements in transferring digital instructions to the physical world, such as advanced robotics and 3-D printing.

Pharma 4.0

The goal of Pharma 4.0 is to create the intelligence needed for engineers and operators to make smarter decisions that increase operational efficiencies, improve yield and engineering productivity, and substantially drive business performance. Pharma 4.0 applies Industry 4.0 concepts to the pharmaceutical setting. Within modular structured smart factories, cyber-physical systems monitor physical processes, create a virtual copy of the physical world, and help make decentralized decisions. With the connected devices of the Internet of Things (IoT), cyber-physical systems communicate and interoperate with each other — and with humans — for real-time control and data collection that contributes usable information shared among participants of the overall pharma manufacturing value chain.

The concept behind achieving this enhanced business performance revolves around three basic elements:

- Broad deployment of IoT: Gathering data from across the global supply chain via smart sensors and smart devices;

- Engineering systems: Data are integrated with intelligence to detect, analyze, and predict outcomes to everyday manufacturing challenges;

- Integrated intelligence: Enterprise-wide intelligence where all data, including enterprise-level systems, are completely interconnected across the entire ecosystem.

The objectives of Pharma 4.0 are ambitious in that the intent is to make the leap from a reactive framework, historically achieved using automation strategies and technologies, to a predictive framework based upon analytics to allow us to anticipate and address potential challenges in the overall supply chain. While the focus of Pharma 4.0 is the manufacturing supply chain, the principles are being applied in a much broader fashion across the entire drug development life cycle.
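
The difference between a reactive and a predictive framework can be made concrete with a small sketch. The Python below is illustrative only and is not drawn from any specific Pharma 4.0 product: the function names, the sample readings, and the 8.0 limit are assumptions, and a simple least-squares trend line stands in for whatever analytics a real deployment would use. The reactive check fires only after a reading has already crossed the limit, while the predictive check estimates when the current trend would cross it.

```python
from typing import Optional, Sequence


def reactive_alarm(readings: Sequence[float], limit: float) -> Optional[int]:
    """Reactive view: index of the first reading already above the limit."""
    for index, value in enumerate(readings):
        if value > limit:
            return index
    return None


def predicted_breach_step(readings: Sequence[float], limit: float) -> Optional[float]:
    """Predictive view: fit a least-squares line through the readings and
    return the (fractional) step at which that line reaches the limit.

    Returns None when there are too few points or the trend is not rising.
    """
    n = len(readings)
    if n < 2:
        return None
    mean_x = (n - 1) / 2
    mean_y = sum(readings) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(readings))
    slope = sxy / sxx
    if slope <= 0:
        return None
    intercept = mean_y - slope * mean_x
    return (limit - intercept) / slope


# Hypothetical sensor readings that drift upward but have not yet breached.
readings = [4.0, 4.5, 5.0, 5.5, 6.0]
print("reactive alarm index:", reactive_alarm(readings, limit=8.0))
print("predicted breach step:", predicted_breach_step(readings, limit=8.0))
```

With drifting but still in-range data, the reactive check reports nothing, while the trend estimate gives operators a lead time in which to intervene.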

Internet Of Things And Data Management

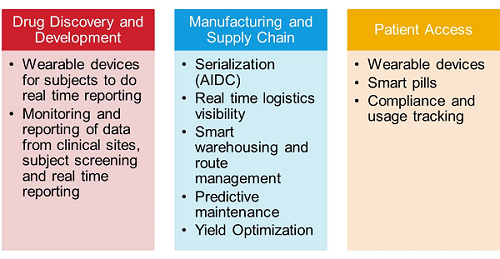

The IoT is one area where we are seeing an expansion of the principles as early as drug discovery. Table 1 below summarizes some of the key areas where IoT is deployed across the drug development life cycle and supply chain, extending from drug discovery all the way to post-commercial pharmacovigilance.

Table 1: Applications of IoT across the Drug Development Life Cycle and Supply Chain

Supply chain visibility remains a very big challenge for pharma and biotech. The ability to anticipate failures or address excursions in real time has always been the end game. Like any process, the supply chain has its own unique sources of variability. Whether that variability results from human interaction or mechanical failure, the ability to monitor, measure, and ultimately predict excursions that are not part of the normal process control requires real-time or near real-time measurement capability. IoT solutions today include sensor network technology coupled with intelligent data analysis. Compliance with the FDA’s Unique Device Identifier (UDI) system and the Drug Supply Chain Security Act (DSCSA) is a significant driver for deploying IoT within the supply chain. Manufacturers, including both drug sponsors and contract manufacturing organizations (CMOs), needed to comply with the act by November 2018, a delay of one year from the original target. Compliance was defined as an implemented solution to create a unique Global Trade Item Number (GTIN), serial number, lot number, expiry date in human-readable format, and GS1-compliant data matrix code. Looking only at the U.S. market, this is a significant technical challenge, especially from a database management perspective. When you look at a global marketplace and supply chain in which more than 70 different serialization standards and regulations exist, it is easy to see how a patchwork solution architecture would not be viable in the long term.
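
To give a sense of what the serialized data looks like, here is a minimal Python sketch of the four elements named above. It uses the standard GS1 mod-10 check digit and the GS1 application identifiers (01) for GTIN, (17) for expiry date, (10) for lot, and (21) for serial number. The class and function names, the GTIN digits, and the lot and serial values are made up for illustration; a production system would rely on a validated serialization platform and barcode encoder rather than code like this.

```python
from dataclasses import dataclass
from datetime import date

# ASCII group separator, used to terminate a variable-length field
# when another field follows it in the encoded string.
GS = "\x1d"


def gtin_check_digit(body: str) -> int:
    """GS1 mod-10 check digit for the digits that precede it.

    Working from the rightmost digit, weights alternate 3, 1, 3, 1, ...
    """
    if not body.isdigit():
        raise ValueError("GTIN body must contain digits only")
    total = 0
    for position, char in enumerate(reversed(body)):
        weight = 3 if position % 2 == 0 else 1
        total += int(char) * weight
    return (10 - total % 10) % 10


def make_gtin14(body13: str) -> str:
    """Append the check digit to a 13-digit body to form a GTIN-14."""
    if len(body13) != 13:
        raise ValueError("expected 13 digits before the check digit")
    return body13 + str(gtin_check_digit(body13))


@dataclass(frozen=True)
class SerializedPack:
    gtin14: str
    serial: str
    lot: str
    expiry: date

    def human_readable(self) -> str:
        """Text printed next to the symbol, with identifiers in parentheses."""
        return (f"(01){self.gtin14}(17){self.expiry:%y%m%d}"
                f"(10){self.lot}(21){self.serial}")

    def element_string(self) -> str:
        """Data handed to a data matrix encoder: fixed-length fields first,
        then the variable-length lot, a separator, and the serial number."""
        return (f"01{self.gtin14}17{self.expiry:%y%m%d}"
                f"10{self.lot}{GS}21{self.serial}")


# Hypothetical pack: every value below is illustrative.
pack = SerializedPack(
    gtin14=make_gtin14("0031234567890"),
    serial="SN0001",
    lot="LOT42",
    expiry=date(2025, 12, 31),
)
print(pack.human_readable())
```

Even this toy version hints at the database problem: every saleable unit carries its own serial number, so record counts grow with unit volume rather than with the number of products or lots.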

Accessing Data, Unlocking Information

Much of pharma’s data today are trapped in isolated islands of automation and databases. This has been one of the first challenges faced by the industry in attempting to step into Big Data analytics. Disparate data captured in separate database silos dramatically complicate any predictive analysis and severely restrict the potential for any new and innovative analysis. If the goal is to have a complete 360-degree view of all relevant data and how they relate to each other across your business, patients, supply chain, and development pipeline, then we need an architecture that can easily handle all types of data. Despite many attempts to solve the silo problem, it has become worse, not better. Data integration has proven to be the most challenging problem in IT, and existing data integration products and strategies are not working. Most organizations have a similar-looking architecture: a bunch of operational “run the business” systems, utilizing a suite of extract, transform, and load (ETL) tools to feed data into “observe the business” data warehouses. In recent years, new sources of data like IoT feeds, message feeds, artificial intelligence (AI), and machine learning tools have made the problem more complicated.

Today, ontological databases have matured to a point where they can address the overwhelming challenges of managing and analyzing siloed, disparate data. There are technical solutions that let you bring the data in “as is,” curate it, apply security and governance, and make it accessible for analysis as needed. These solutions are flexible enough to avoid having to model everything at once, and you don’t have to change it manually every time the data or your business needs change (it is done instead using ETL). These platforms are designed to give the business, architect, and developer what they all want, which is:

- The ability to load data as is – Instead of waiting on complex ETL, data ingestion is immediate. There is no need to define a schema in advance.

- Unified platform – Instead of stitching together a bunch of separate products, everything is already unified in an integrated and single platform that provides a consistent, real-time view of data.

- Smart curation – Instead of worrying about mapping schemas together, integrating a master data management (MDM) tool, writing custom algorithms, etc., these solutions have integrated tools designed to manage the curated data.

- Advanced security – Instead of disjointed data lineage, the database tracks that metadata right alongside the data itself. Instead of worrying whether to lock data up or risk sharing it, these systems have highly granular, tight control over exactly what data gets shared with whom.

- Simple development – Instead of waiting for ETL to complete or learning some proprietary language, developers start building data services application program interfaces (APIs) as soon as the initial data is loaded.
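
The “load as is” idea, with lineage stored alongside the data, can be sketched in a few lines of Python. This is a toy in-memory store, not the API of any commercial platform: the `DocumentStore` class, its methods, and the sample MES and QMS records are all hypothetical. Each record is wrapped unchanged in an envelope that carries its source and ingestion time, and queries are answered against whatever fields happen to be present, so no schema has to be agreed in advance.

```python
from datetime import datetime, timezone
from typing import Any, Dict, Iterator, List


class DocumentStore:
    """Toy schema-on-read store: records are kept exactly as received."""

    def __init__(self) -> None:
        self._docs: List[Dict[str, Any]] = []

    def load_as_is(self, record: Dict[str, Any], source: str) -> None:
        """Ingest a record without transforming it; lineage metadata
        travels in the same envelope as the content."""
        self._docs.append({
            "source": source,
            "ingested_at": datetime.now(timezone.utc).isoformat(),
            "content": record,
        })

    def find(self, **criteria: Any) -> Iterator[Dict[str, Any]]:
        """Yield every envelope whose content matches all given fields.
        Records that lack a field simply do not match."""
        for doc in self._docs:
            content = doc["content"]
            if all(content.get(key) == value for key, value in criteria.items()):
                yield doc


# Two hypothetical systems describe the same batch with different fields.
store = DocumentStore()
store.load_as_is({"batch": "B-17", "yield_pct": 93.2}, source="MES")
store.load_as_is({"batch": "B-17", "deviation": "temperature excursion"},
                 source="QMS")

for doc in store.find(batch="B-17"):
    print(doc["source"], doc["content"])
```

Curation, security, and governance are exactly the parts this sketch leaves out, and they are where the platforms described above do most of their work.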

These systems now provide pharma with the potential for a single portal and interface to all potential data across the entire business value chain. Most importantly, it doesn’t require disassembling any of the solutions that have been put in place. The reluctance to migrate away from legacy systems is one of the biggest organizational hurdles faced by cross-functional data management initiatives. Look for Big Data initiatives within the industry to shift to these solutions in the next three to five years.

Artificial Intelligence (AI)

Few areas of innovation have had as broad a potential impact as AI. If you think of the IoT as connecting devices in order to gather data, then AI makes the decisions based upon that data. As such, the applicability of AI is not limited to the shop floor or the manufacturing supply chain.

Potential applications of AI span the spectrum from drug discovery and molecule identification to post-approval pharmacovigilance. Almost every major market in the world and many secondary countries have formal AI strategies in place or underway.

Broadly speaking, the term AI applies to any technique that enables computers to mimic human intelligence. To fully understand the applicability of AI, it is important to look closer at the two subsets of AI: machine learning and deep learning. Machine learning is exactly what it sounds like – the application of targeted statistical techniques that enable machines to improve upon tasks with experience. Machine learning has been used in combination with well-established techniques such as “fuzzy logic” to build a set of rules that allows equipment to consistently improve its performance against a predefined set of objectives as it gathers data. Facial recognition is one example of this application.

Machine learning is not only being used on the shop floor to optimize performance; it is being applied in drug discovery to improve the success rates of new drug therapies and drug modalities as they move through the clinical pipeline. An MIT study [1] published in April 2019 concluded, after analyzing more than 21,000 clinical trials between 2000 and 2015, that only 13.8 percent of drugs successfully pass clinical trials. It is not hard to argue that this performance is unsustainable in the face of downward pricing pressures across the globe. One large pharma organization is using machine learning to improve its molecule selection process. By building large libraries of digital images of cells treated with different experimental compounds, it is using machine learning algorithms to screen potential compounds faster with a higher rate of success.

Another potential blockbuster application of AI is the treatment of complex diseases that have multiple modes and mechanisms of action, such as autoimmune diseases like multiple sclerosis (MS) and ALS. Typically, current research targets one gene anomaly or defect. Using AI, it may be possible to identify multiple genes that influence the disease and devise drug therapies against multiple targets.

Another interesting application of AI is happening in terms of clinical treatment. Some cancer treatments are toxic, requiring adjusting the dose to maximal delivery as the patient’s treatment progresses. This is called dynamic dosing, and it is a complex treatment regimen. AI can be used to continuously identify the optimal doses of each drug to result in a durable response, giving each individual patient the ability to live a cancer-free and healthy life.

Deep Learning

Deep learning is the other subset of AI, composed of algorithms that permit software to train itself to perform tasks, like speech and image recognition, by exposing multilayered neural networks to vast amounts of data. One of the areas ripe for deep learning lies in establishing the capability to easily collect natural language-derived data, for example, when evaluating patients against the inclusion/exclusion criteria for clinical trials. Identifying patients who satisfy the inclusion criteria is one of the key aspects of constructing a viable controlled clinical study, and for most clinical studies, any time recovered in the enrollment timeline can translate directly to time-to-market. On trial-matching search platforms, patients need only answer a few simple questions to receive a list of suggested studies they may be eligible for. Usually, when drug developers submit details of a new trial, most of it is entered as structured data in formats such as drop-down menus. These data are easy for computers to record and analyze. However, patient eligibility criteria are entered into free-text fields where the submitter can write anything. Traditionally, it was nearly impossible for a computer to “understand” this text. AI, specifically deep learning algorithms, can read this unstructured data, so the computer can match patients to appropriate clinical trials.

Extending this concept to the treatment of patients, AI is being applied to analyze structured and unstructured clinical data, including doctors’ notes and other free-text documents. Clinical data is separated into key elements while also protecting sensitive health information. The AI application then extracts thousands of these clinical data points to create a multidimensional profile. Doctors and researchers can then use these profiles to find suitable candidates for a clinical trial.

Blockchain

Blockchain’s lineage is in cryptocurrency. The primary requirement for buying and selling cryptocurrency is security, not speed or efficiency. Blockchain creates a digital ledger of all transactions that may take place in the supply chain. The application of blockchain in pharma is still in the investigative phases. One application that is being adopted by the global supply chain is the concept of smart contracts. A smart contract is a computer protocol intended to digitally facilitate, verify, or enforce the negotiation or performance of a contract without third parties. In this format, contracts could be converted to computer code, stored and replicated on the system, then supervised by the network of computers that run the blockchain. This would also result in ledger feedback such as transferring money and receiving the product or service. International organizations, including pharma, governments, and banks, are turning to blockchain to ensure and enforce the terms of a contract.

Several areas where blockchain has shown utility include:

- Verifying the authenticity of returned drugs.

- Utilizing the DSCSA serialization requirements and medical device UDIs to establish a digital provenance and chain of custody for drugs and devices – addressing the counterfeit problem worldwide.

- Enforcing supply chain logistics requirements. The fully integrated enterprise, utilizing IoT to track critical parameters such as temperature, creates a digital ledger, which, if violated, would immediately alter the terms of the engagement by the carrier and/or supplier.

- Supporting informed consent, which is a mandatory requirement for any trial in the U.S. and internationally. The use of blockchain can help ensure all components of the consent process are compliant and adhered to.
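
The logistics case above can be illustrated with a small Python sketch. It is a simplification under stated assumptions: a single-process, hash-chained ledger stands in for a real blockchain (there is no network, consensus, or smart-contract runtime here), and the `ShipmentLedger` class, the sensor name, the 8.0 °C limit, and the status labels are all hypothetical. What it does show is the two properties the text relies on: readings cannot be quietly edited after the fact, and a recorded excursion changes the contract outcome automatically.

```python
import hashlib
import json
from typing import Any, Dict, List


def _digest(payload: Dict[str, Any], previous_hash: str) -> str:
    """SHA-256 over the entry's payload plus the hash of the entry before it."""
    body = json.dumps(payload, sort_keys=True) + previous_hash
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


class ShipmentLedger:
    """Append-only, hash-chained log of cold-chain temperature readings."""

    GENESIS = "0" * 64

    def __init__(self, max_temp_c: float) -> None:
        self.max_temp_c = max_temp_c
        self.entries: List[Dict[str, Any]] = []

    def record(self, sensor_id: str, temp_c: float) -> None:
        previous_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        payload = {"sensor_id": sensor_id, "temp_c": temp_c}
        self.entries.append({
            "payload": payload,
            "previous_hash": previous_hash,
            "hash": _digest(payload, previous_hash),
        })

    def is_intact(self) -> bool:
        """Recompute every hash; any edited reading breaks the chain."""
        previous_hash = self.GENESIS
        for entry in self.entries:
            if entry["previous_hash"] != previous_hash:
                return False
            if entry["hash"] != _digest(entry["payload"], previous_hash):
                return False
            previous_hash = entry["hash"]
        return True

    def contract_status(self) -> str:
        """Hypothetical contract terms evaluated directly from the ledger."""
        if not self.is_intact():
            return "DISPUTED"
        if any(e["payload"]["temp_c"] > self.max_temp_c for e in self.entries):
            return "PENALTY"
        return "FULFILLED"


ledger = ShipmentLedger(max_temp_c=8.0)
ledger.record("truck-7", 5.0)
ledger.record("truck-7", 9.1)   # excursion above the agreed limit
print(ledger.contract_status())
```

In a real deployment the ledger would be replicated across the parties to the contract, which is what removes the need for a trusted third party.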

Have We Put The Cart Before The Horse?

One of pharma’s greatest foibles as an industry has been the penchant to focus on the wrong things. We saw this with process analytical technology (PAT), where we focused on the design and implementation of the technology and ignored the impact of foundational material characterization and supplier control. We saw it with Lean and Six Sigma, where the emphasis on the tools and certifications in the absence of the cultural leadership components relegated these operational excellence philosophies to simply a suite of tools, rather than a holistic approach to business performance. Pharma 4.0 has the potential to fall into the same trap. The focus on technology in the absence of understanding the basic question to be answered can derail a cross-functional initiative in the blink of an eye.

There is no doubt society is becoming increasingly digitized, and this can be a good thing, bringing improved efficiency, enhanced quality, and better company compliance with ever-increasing, data-related regulatory requirements. There is no shortage of technologies, but choosing the one that is going to have the greatest positive impact on your company, in the area that you most need it, is obviously a crucial decision. With production data now available for the asking, executives rightly wonder about how to begin. Which data would be most beneficial? Which technologies would deliver the biggest return on investment for a company, given its unique circumstances? Which data leakage threats are causing the most pain? This last question has made the headlines with high-profile ransomware attacks on Merck that affected the company’s operations worldwide.

Industry confronted this basic question with its first foray into Big Data analytics. The first step to identifying a strategy and solution is to understand what success looks like. Is the resulting analysis intended to be predictive, descriptive, diagnostic, or prescriptive? The answer to that basic question will dictate the path forward and lead to the solutions to be considered.

This article has been adapted from the 2019 CPhI Annual Report.

References:

1. R. Cross, “Drug development success rates are higher than previously reported,” https://cen.acs.org/articles/96/i7/Drug-development-success-rates-higher.html

About The Author:

Bikash Chatterjee is president and chief science officer for Pharmatech Associates. He has over 30 years’ experience in the design and development of pharmaceutical, biotech, medical device, and IVD products. His work has guided the successful approval and commercialization of over a dozen new products in the U.S. and Europe. Chatterjee is a member of the USP National Advisory Board and is the past-chairman of the Golden Gate Chapter of the American Society of Quality. He is the author of Applying Lean Six Sigma in the Pharmaceutical Industry and is a keynote speaker at international conferences. Chatterjee holds a B.A. in biochemistry and a B.S. in chemical engineering from the University of California at San Diego.
